Introduction

OpenAI recently unveiled the newest addition to its GPT-3 family of natural language models, ChatGPT, and it is much more capable than previous models. Almost immediately, people found uses for it ranging from writing Seinfeld scripts to hallucinating a fully-fledged Linux environment. Closer to home on the cyber security front, it can even identify and create exploits for novel code. So, why not see if it can empower incident responders?

ChatGPT is an iterative dialogue application built on top of the GPT-3 text-to-text model: given a textual input, it produces a textual output. Naturally, we asked ourselves, “What in the incident response process requires such an operation?”

At Cado, we’ve built an engine that automates forensic data capture, processing and analysis to expedite incident response in cloud, container and serverless environments. The Cado platform automatically surfaces key details related to an incident, including root cause, compromised systems and compromised roles. We wanted to see what additional conclusions ChatGPT could surface.

Setting up the Experiment

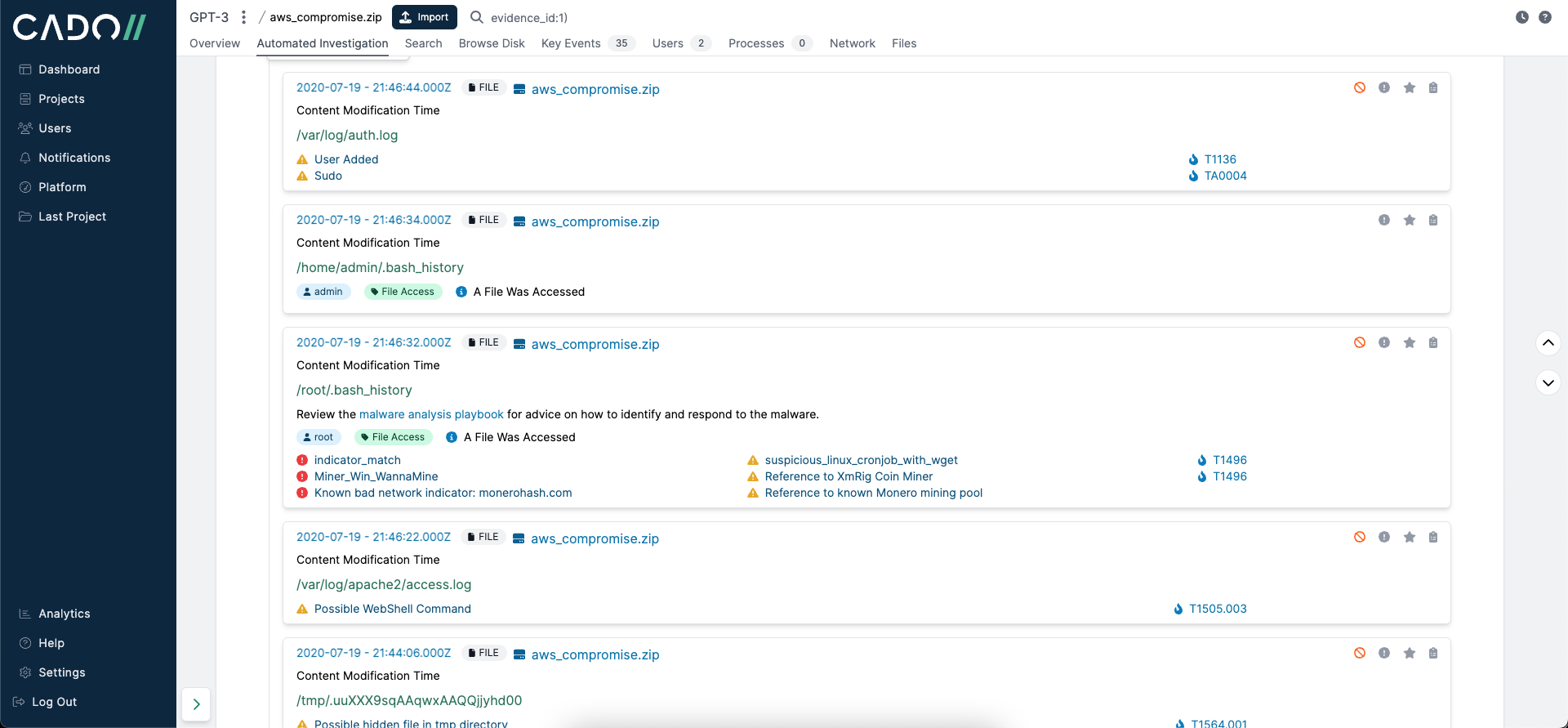

First, we processed a compromised EC2 system running in AWS using the Cado Platform:

In the Automated Investigation tab, Cado surfaces the most important events related to the incident. These key events help analysts seamlessly kick off and pivot their investigation.

To see what type of investigative conclusions ChatGPT could draw without human intervention, we exported these key events to JSON format:

[
  {
    "alarm_information": [
      {
        "attack": "TA0004",
        "description": "Sudo",
        "severity": 3
      }
    ],
    "evidence_id": 1,
    "evidence_name": "aws_compromise.zip",
    "extra": "",
    "filename": "/var/log/auth.log",
    "first_day": false,
    "format": "syslog",
    "host": "ip-172-31-78-104",
    "id": "Y_4RB4UBwZt3WiNd6xM5",
    "index_id": 1,
    "inode": "-",
    "is_folder": false,
    "macb": "M...",
    "notes": "-",
    "query_suggestions": [],
    "sha256": "7aaf91c92fd6152e30a1805219f07ab5d3df289e97f078a7afa0775ea0d20fe5",
    "short": "[sudo] root : TTY=pts/0 ; PWD=/home/admin ; USER=root ; COMMAND=/usr/bin/systemctl restart apache2",
    "source": "LOG",
    "sourcetype": "Log File",
    "tag": null,
    "timestamp": 1658261666,
    "type": "Content Modification Time",
    "user": null
  },
  …
]

Getting a Report from ChatGPT

Along with submitting the above JSON data, we wrote the following prompt in OpenAI ChatGPT:

Events:

(JSON Data from Above)
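As a rough illustration of this step, the sketch below assembles a prompt from exported key events in Python. The `KEEP_FIELDS` selection, the `slim_event` and `build_prompt` helpers, and the instruction text supplied by the caller are all assumptions made for illustration; they are not the prompt we used, and they are not part of the Cado export itself.

```python
from __future__ import annotations

import json

# Hypothetical choice of fields worth sending to the model. Hashes, inodes and
# similar bookkeeping fields are dropped to keep the prompt short.
KEEP_FIELDS = ("id", "timestamp", "host", "filename", "short", "alarm_information")


def slim_event(event: dict) -> dict:
    """Return a copy of the event containing only the fields in KEEP_FIELDS."""
    return {key: event[key] for key in KEEP_FIELDS if key in event}


def build_prompt(instructions: str, events: list[dict]) -> str:
    """Join caller-supplied instructions and the events, oldest event first."""
    ordered = sorted(events, key=lambda event: event.get("timestamp", 0))
    payload = json.dumps([slim_event(event) for event in ordered], indent=2)
    return f"{instructions}\n\nEvents:\n\n{payload}"


if __name__ == "__main__":
    sample_events = [
        {
            "id": "Y_4RB4UBwZt3WiNd6xM5",
            "timestamp": 1658261666,
            "host": "ip-172-31-78-104",
            "filename": "/var/log/auth.log",
            "sha256": "7aaf91c92fd6152e30a1805219f07ab5d3df289e97f078a7afa0775ea0d20fe5",
            "short": "[sudo] root : TTY=pts/0 ; PWD=/home/admin ; USER=root ; "
                     "COMMAND=/usr/bin/systemctl restart apache2",
        }
    ]
    # Placeholder instruction text, purely illustrative.
    print(build_prompt("Summarise the following events.", sample_events))
```

Trimming fields before submission is a design choice rather than a requirement: it keeps the input within the model's length limits and reduces how much evidence leaves the environment.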

In response, ChatGPT returned a fairly convincing triage report of the incident:

1. An unauthorised user gained access to the system using the "Sudo" attack (ID: TA0004) and executed the command "/usr/bin/systemctl restart apache2" (Event ID: CnjI4YQBfslkQnwL65kB)

2. The user then installed PHP and the Apache2 module for PHP using the command "/usr/bin/apt install php libapache2-mod-php" (Event ID: RHjI4YQBfslkQnwL6Zgv)

3. The user then installed PHP7.2, the Apache2 module for PHP7.2, and PHP-MySQL using the command "/usr/bin/apt install php7.2 libapache2-mod-php7.2 php-mysql" (Event ID: QnjI4YQBfslkQnwL6Zgv)

4. The user then created a new user named "hacker" using the command "/usr/sbin/useradd -m hacker" (Event ID: OHjI4YQBfslkQnwL6Zgv)

5. The user then added the user "hacker" to the sudoers group using the command "/usr/sbin/usermod -aG sudo hacker" (Event ID: hHjI4YQBfslkQnwL6Zgv)

6. The user then changed the password for the user "hacker" using the command "/usr/bin/passwd hacker" (Event ID: WnjI4YQBfslkQnwL6Zgv)

7. The user then accessed the system as the user "hacker" and executed the command "/usr/bin/nano /etc/ssh/sshd_config" to edit the SSH configuration (Event ID: cHjI4YQBfslkQnwL6Zgv)

8. The user then restarted the SSH service using the command "/usr/sbin/service ssh restart" (Event ID: PHjI4YQBfslkQnwL6Zgv)

Careful Now

While this provides an analyst with a quick, human-readable explanation of the incident, it’s not 100% accurate. Because we have the raw system events available to us within the Cado platform, we can quickly verify the output from ChatGPT. For example, we can see that the username “hacker” was not actually involved in the incident; that was the model’s own invention. This is a common concern with ChatGPT that OpenAI itself has raised: it can hallucinate, and when it does, it does so with confidence.
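This kind of cross-check can be partly automated. The sketch below is a minimal example, assuming the report cites events as `Event ID: <id>` and wraps commands and names in double quotes, as in the output above. The helper names `unverified_event_ids` and `unsupported_quotes` are hypothetical, and a plain substring match is a crude test that will miss paraphrased claims.

```python
from __future__ import annotations

import re

# Matches citations such as "(Event ID: Y_4RB4UBwZt3WiNd6xM5)".
EVENT_ID_PATTERN = re.compile(r"Event ID:\s*([A-Za-z0-9_-]+)")
# Matches anything the report places between straight double quotes.
QUOTED_PATTERN = re.compile(r'"([^"]+)"')


def unverified_event_ids(report: str, events: list[dict]) -> list[str]:
    """Return event IDs cited in the report that are absent from the export."""
    known_ids = {event["id"] for event in events if "id" in event}
    return [eid for eid in EVENT_ID_PATTERN.findall(report) if eid not in known_ids]


def unsupported_quotes(report: str, events: list[dict]) -> list[str]:
    """Return quoted strings that never appear in any event's 'short' field."""
    evidence_text = "\n".join(str(event.get("short", "")) for event in events)
    return [quote for quote in QUOTED_PATTERN.findall(report) if quote not in evidence_text]


if __name__ == "__main__":
    events = [
        {
            "id": "Y_4RB4UBwZt3WiNd6xM5",
            "short": "[sudo] root : TTY=pts/0 ; PWD=/home/admin ; USER=root ; "
                     "COMMAND=/usr/bin/systemctl restart apache2",
        }
    ]
    report = (
        'The user ran "/usr/bin/systemctl restart apache2" '
        "(Event ID: Y_4RB4UBwZt3WiNd6xM5) and then created a user with "
        '"/usr/sbin/useradd -m hacker" (Event ID: OHjI4YQBfslkQnwL6Zgv)'
    )
    print(unverified_event_ids(report, events))
    print(unsupported_quotes(report, events))
```

Anything these checks flag still needs an analyst's review; a clean result only means the cited IDs and quoted strings exist in the evidence, not that the narrative connecting them is correct.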

Another potential concern is privacy. It is certainly possible to run fine-tuned GPT models locally, but the most powerful models will remain API-driven for some time, and that means sending data to a third party.
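One partial mitigation is to replace obvious identifiers with placeholders before anything leaves your environment. The following is a minimal sketch, assuming that regular expressions for IPv4 addresses and email addresses are good enough for a first pass; the `redact` helper and the placeholder format are hypothetical. It will not catch usernames, passwords, or hostnames that embed an address in another form (such as `ip-172-31-78-104`), so it is not a substitute for a proper data-handling review.

```python
from __future__ import annotations

import re

# Deliberately simple patterns: dotted-quad IPv4 addresses and common email forms.
IPV4_PATTERN = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
EMAIL_PATTERN = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")


def redact(text: str) -> tuple[str, dict[str, str]]:
    """Replace emails and IPv4 addresses with stable placeholders.

    Returns the redacted text and a mapping from each original value to its
    placeholder, so an analyst can translate the model's answer back locally.
    """
    mapping: dict[str, str] = {}

    def substitute(kind: str):
        def _replace(match: re.Match) -> str:
            value = match.group(0)
            if value not in mapping:
                mapping[value] = f"<{kind}_{len(mapping) + 1}>"
            return mapping[value]
        return _replace

    text = EMAIL_PATTERN.sub(substitute("EMAIL"), text)
    text = IPV4_PATTERN.sub(substitute("IP"), text)
    return text, mapping


if __name__ == "__main__":
    line = "Failed password for admin from 203.0.113.7 port 22; notify bob@example.com"
    redacted, lookup = redact(line)
    print(redacted)
    print(lookup)
```

Using the same placeholder for every occurrence of a value keeps the events correlatable, so the model can still reason about "the same address" without seeing the address itself.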

Shorter Investigations

Before moving on, we wanted to try one more thing. Instead of submitting all of the events within Cado’s Automated Investigation tab, we submitted only the highlights from each key event to see how ChatGPT would interpret them:

<SAME PROMPT AS BEFORE>

Events:

*** WebShell Activity ***
Detected: 7
- Webshell File (1)
- Webshell Event (6)

*** Malware Installation ***
Detected: 1
- Suspicious Download from Pastebin (1)

*** Persistence ***
Detected: 26
- Linux Cronjob File (5)
- Cron Event (13)
- File hiding in Temporary Directory (6)
- User Added (2)

*** Command and Control ***
Detected: 2
- Communication over Tor (2)

*** Privilege Escalation ***
Detected: 12
- Sudo access as Root (12)

*** Anti-Forensics ***
Detected: 1
- Linux Log Cleaner (1)

While the result was a bit less informative than before, it was definitely still helpful (and more accurate):

Based on the events recorded, it appears that the system was compromised in the following manner:

- A webshell file was detected (event ID 1).

- The webshell was used to carry out several events (event IDs 6).

- A suspicious download from Pastebin was detected (event ID 1). This likely contained the malware that was installed on the system.

- The malware attempted to establish persistence on the system by creating a Linux cronjob file (event ID 5), scheduling cron events (event ID 13), hiding files in the temporary directory (event ID 6), and adding a new user (event ID 2).

- The malware communicated with its command and control server over Tor (event ID 2).

- The malware attempted to gain elevated privileges on the system by using sudo access as the root user (event ID 12).

- Finally, the malware attempted to clean the Linux logs to cover its tracks (event ID 1).

Overall, it appears that the system was successfully compromised and the attacker carried out various actions to maintain access and evade detection.
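The condensed highlights submitted in this second experiment follow a simple layout: a category header, a total, and one line per detection type with its count. As a minimal sketch, assuming the per-detection counts are already available as a nested dictionary, the text could be rendered as follows. The `render_highlights` helper and its input shape are hypothetical and do not describe how the Cado platform produces this view.

```python
from __future__ import annotations


def render_highlights(detections: dict[str, dict[str, int]]) -> str:
    """Render {category: {detection name: count}} in the highlights layout.

    The "Detected" total for each category is the sum of its detection counts.
    """
    sections = []
    for category, counts in detections.items():
        lines = [f"*** {category} ***", f"Detected: {sum(counts.values())}"]
        lines += [f"- {name} ({count})" for name, count in counts.items()]
        sections.append("\n".join(lines))
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(
        render_highlights(
            {
                "WebShell Activity": {"Webshell File": 1, "Webshell Event": 6},
                "Anti-Forensics": {"Linux Log Cleaner": 1},
            }
        )
    )
```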

Final Thoughts

While we only briefly experimented with ChatGPT, we can clearly see the potential benefits artificial intelligence can offer when optimized for incident response, such as:

- Improved Mean Time to Resolution (MTTR);

- Reduced skill requirements for the analyst; and

- Enhanced clarity for executives to make more informed decisions in the event of an incident.

However, until the technology is further optimized for this use case, an analyst still has to do a lot of manual work to confirm reported findings. Other potential issues to consider include security and legal concerns. For example, OpenAI states that only public data should be submitted and that private APIs and models will be available in the future. Incident data, by contrast, contains a lot of sensitive information, such as staff numbers, email addresses, medical information, student records, IP addresses, usernames, and passwords.

At Cado, we are at the forefront of technological advancement for the purpose of empowering organizations to expedite incident response. If you're interested in seeing how Cado can help augment your current incident response process in the cloud, get in touch or try out our 14-day free trial.

Given the promising benefits we saw when we first started experimenting with ChatGPT for incident response, we've since introduced a new beta feature: an interactive Q&A interface to streamline the analysis of forensic evidence.