The Problem

We built Cado Response to analyse data deeply. We'll happily churn through a 1TB disk image to find the needle in the forensic haystack.

But the architecture you need to go deep is different to the architecture you need to go wide.

Going wide is about scaling to millions of logs or endpoints, and being able to analyse the data in a reasonable time frame. When responding to incidents in the cloud - you often need to both analyse large sets of logs and dive in deep on individual systems.

Existing Approaches to Searching Logs in the Cloud

The most obvious first approach is to search through your logs in a SIEM. If the data is already in your SIEM and you're in a position to do this - that's fantastic. SIEMs and Log Analysis tools are built to go wide. But they can also be expensive. Things are improving here fast though. Modern SIEM’s often use a cheaper “indexless” approach to search larger amounts of data at less cost. Or they even keep logs in place to reduce the cost of moving them.

If you are in AWS, you can use Athena to parse and search through your logs in S3. This is a great option as it can parse data that can be queried in a SQL like nature. However, it can be expensive if you have a lot of data and the interface is clunky at best. The good folk over at Invictus-IR have done some work extending this to support Sigma rules for detection. Another option within AWS, is to use the new Mountpoint for S3. This lets you treat S3 as a filesystem, and can use tools such as grep to search data.

In Azure most people will default to Sentinel, and Chronicle in GCP. But both have some limits to how far they will search.

Going wide with cloudgrep

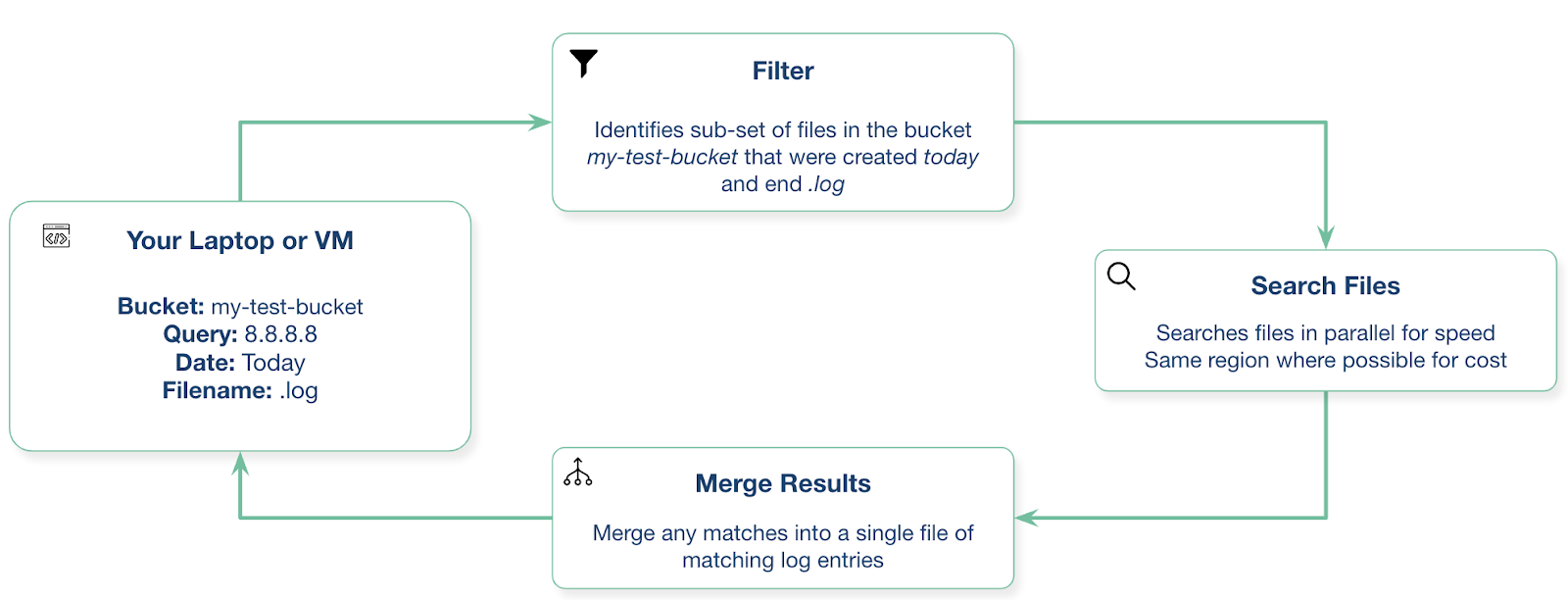

In search of a simpler approach, we've recently open-sourced a tool named cloudgrep. Cloudgrep is a tool that allows you to search logs in the cloud storage. It lets you search across cloud storage in AWS, Azure and GCP. It will search inside compressed files, and even binary data. It searches files in parallel for speed:

You can then export the results to a smaller log file for import into other solutions such as Cado Response. A simple example is:

./cloudgrep --bucket test-s3-access-logs --query 9RXXKPREHHTFQD77 > combined.logs

A more complex example, which efficiently filters down the logs to be searched based on the logs filename and modification date, is:

./cloudgrep -b test-s3-access-logs --prefix "logs/" --filename ".log" -q 9RXXKPREHHTFQD77 -s "2023-01-09 20:30:00" -e "2023-01-09 20:45:00" --file_size 10000

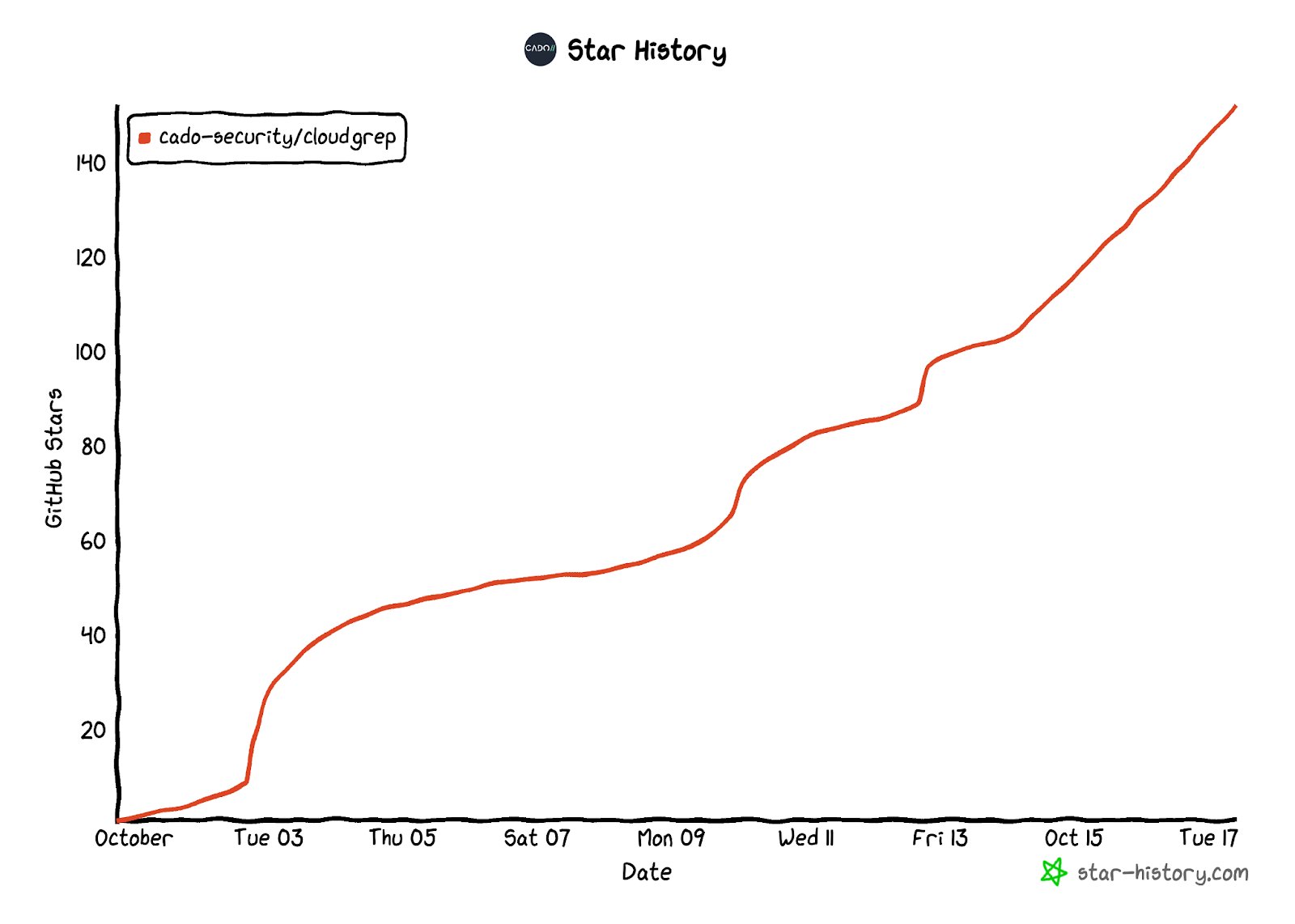

We’re excited to see the response that cloudgrep has had. It’s been less than a month, and the project has already had over 150 200 Github stars and thousands of visitors:

For more on how to use cloudgrep to search logs in cloud storage, please see the Github repository.